The Basics

One of the most fundamental visual effects in games is the particle system. At its simplest, a particle system is a collection of objects that can each be described with a single vector quantity: their position. Even these incredibly simple particles can be moderately versatile, producing effects such as clouds, fog, plants and so on. Where particles really shine, though, is when they're allowed to change over time. The simplest way of doing this is adding one more vector quantity to each particle's description: its velocity. Once each frame we add the particle's velocity to its position, then draw it. This allows particles to simulate simple explosions, fires, smoke, bullets, sparks and so on, as well as the clouds and fog from before.

How? Just change the values that are assigned to position and velocity when the particles are created.
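As a rough sketch of that idea (plain Python rather than real game code; `Particle`, `spawn_spray`, `spawn_explosion` and `update` are names I've made up for illustration), the only difference between a bullet spray and an explosion here is the range of angles used when picking each particle's starting velocity:

```python
import math
import random
from dataclasses import dataclass


@dataclass
class Particle:
    # Two vector quantities per particle: position and velocity (2D here).
    x: float
    y: float
    vx: float
    vy: float


def spawn_spray(origin, speed, direction, spread, count, rng):
    """One shared origin; velocities fan out around `direction` (radians)."""
    ox, oy = origin
    particles = []
    for _ in range(count):
        angle = direction + rng.uniform(-spread / 2, spread / 2)
        particles.append(
            Particle(ox, oy, speed * math.cos(angle), speed * math.sin(angle))
        )
    return particles


def spawn_explosion(origin, speed, count, rng):
    """Same as a spray, but the fan covers the full circle."""
    return spawn_spray(origin, speed, 0.0, 2 * math.pi, count, rng)


def update(particles):
    """Once per frame: add each particle's velocity to its position."""
    for p in particles:
        p.x += p.vx
        p.y += p.vy


rng = random.Random(1)  # fixed seed so runs are repeatable
bullets = spawn_spray((0.0, 0.0), speed=2.0, direction=0.0,
                      spread=math.radians(30), count=50, rng=rng)
blast = spawn_explosion((0.0, 0.0), speed=2.0, count=50, rng=rng)

for _ in range(10):  # simulate ten frames
    update(bullets)
    update(blast)
```

Velocity is measured in units per frame here, to match the "add it once each frame" rule. A real system would likely scale by the frame time instead.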

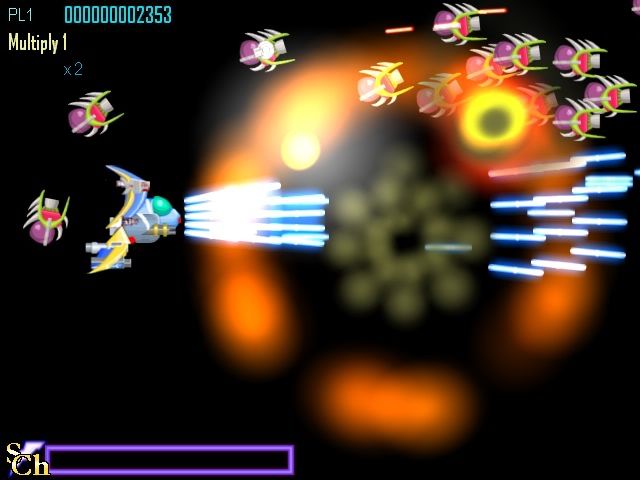

Several simple particle systems, from a previous game project of mine.

In this example the blue bullets all launch from the same position but are assigned velocities that cause them to spray outward. The explosions are similar, but the velocities point in all directions rather than just to the right. The smoke in the middle appears to drift behind the flame because it is created slightly later and given a slower speed. This particular example has a few other variables for particles, namely colour and scale. This saves on art production, since the same clouds can be used for both smoke and fireballs.

For Space Combat Sim

Relatively modern 3D hardware supports drawing particles quickly via what are known as point sprites. Instead of drawing a particle using a billboard, which requires four vertices, each particle only requires one vertex. This is great, since we can pump out more particles with less data going between main memory and video memory. However, this only gets us as far as drawing the very simple particles I mentioned in the beginning. What's worse, as we add variables to particles, the amount of data that needs to be sent to the graphics hardware grows quickly. This gets even worse when varying a particle's properties over time... at least if we do it all on the CPU.
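To put some rough numbers on that, here is a back-of-the-envelope sketch. The figures are my own assumptions, not measurements: 32-bit floats, 10,000 particles, every vertex re-sent each frame, and billboards counted by position only (a real billboard vertex would usually carry texture coordinates as well).

```python
FLOAT_BYTES = 4  # assuming 32-bit floats


def bytes_per_frame(particle_count, floats_per_vertex, vertices_per_particle):
    """Data sent to the graphics hardware if every vertex is re-sent each frame."""
    return particle_count * vertices_per_particle * floats_per_vertex * FLOAT_BYTES


count = 10_000
position_only = 3        # x, y, z
with_extras = 3 + 4 + 1  # position + RGBA colour + scale

billboard = bytes_per_frame(count, position_only, 4)          # 480000 bytes
point_sprite = bytes_per_frame(count, position_only, 1)       # 120000 bytes
point_sprite_extras = bytes_per_frame(count, with_extras, 1)  # 320000 bytes

print(billboard, point_sprite, point_sprite_extras)
```

Under these assumptions, going from billboards to point sprites cuts the data to a quarter, but adding just colour and scale takes back most of that saving.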

Besides the CPU, computers and game consoles come with another extremely powerful processor capable of chugging through obscene numbers of operations rapidly: the GPU, on the video hardware. Using it requires some rethinking of how we do operations like repositioning particles, since programming a GPU is quite different from programming a general-purpose CPU. The GPU is amazingly fast at floating-point math and vector operations, BUT it comes with the caveat that changing input data (i.e. changing the vertices that have been passed from the CPU) isn't feasible. I won't go into a huge explanation of why, but an analogy will help: vertices are processed in something like a water pipe. Data can only flow in one direction. You can change it as it flows from one process to the next, but you can't reverse the flow. (There are ways around this, but they're painful and I'm not going to go into them.)

So we can't apply velocity to a particle by simply adding to its position repeatedly. What can we do? If you remember your kinematic equations, an object's position can be expressed in relation to time with the equation Pt = P0 + V*t, where P0 is the initial position, V is the velocity and t is the elapsed time.
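Here is a small sketch of why that equation suits the one-way pipe. It's written in Python purely to show the arithmetic (on real hardware this would live in a vertex shader), and `position_at` and `position_by_stepping` are illustrative names of my own. The closed form never writes anything back: it reads the constant P0 and V plus the current time and produces the position from scratch.

```python
def position_at(p0, v, t):
    """Closed form Pt = P0 + V*t: reads constant inputs, stores nothing."""
    return tuple(p + vel * t for p, vel in zip(p0, v))


def position_by_stepping(p0, v, frames):
    """The CPU-style approach: keep adding velocity to a stored position."""
    pos = list(p0)
    for _ in range(frames):
        for i, vel in enumerate(v):
            pos[i] += vel
    return tuple(pos)


p0 = (1.0, 2.0, 0.0)    # initial position
v = (0.5, -0.25, 0.0)   # velocity in units per frame

# Both approaches agree after 8 frames, but only the second one
# had to modify its own data along the way.
print(position_at(p0, v, 8))           # (5.0, 0.0, 0.0)
print(position_by_stepping(p0, v, 8))  # (5.0, 0.0, 0.0)
```

Here t is counted in frames so that it lines up with a per-frame velocity; with a velocity in units per second, t would be elapsed seconds instead.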

Getting the initial position (P0) of a particle to the GPU is easy, since that's simply the vertex position for the point sprite. Getting the time and velocity to the GPU is fairly simple too, but generally requires a bit more thought, since this is where particle systems can be made extremely fast and flexible at the same time. I'll leave that for next time, however, since this entry is already getting rather long.
